AWS Lambda is a serverless compute service that lets cloud application development teams run code without provisioning or managing servers. While Lambda natively supports several programming languages, developers often face limitations with dependency management and runtime constraints. This is where Docker containers come to the rescue. By packaging the Lambda function as a Docker image, developers can overcome these limitations, manage complex dependencies, and use custom runtimes that Lambda doesn’t natively support.

The architecture for containerized Lambda functions follows a straightforward pattern. The Docker image is stored in an Amazon Elastic Container Registry (ECR) repository, a fully managed container registry service. When the AWS Lambda function is created or updated, Lambda pulls the container image from Amazon ECR, and execution environments are created from it. For subsequent invocations, Lambda reuses existing execution environments when possible, without pulling the image again. This approach provides the benefits of containers (packaging code, runtime, dependencies, and configurations together) while retaining Lambda’s quick startup for reused environments and its serverless benefits. The separation between image storage in ECR and execution in Lambda provides flexibility and scalability for serverless applications.

In this note, we’ll learn how to deploy a Docker image into an AWS Lambda function and automate the process using Terraform and GitHub Actions.

This high-level objective is further broken down into four smaller stages:

Stage 1: Provision the infrastructure to host the Docker image

Stage 2: Build and push the Docker image into Amazon ECR

Stage 3: Deploy the Docker image into AWS Lambda

Stage 4: Automating with GitHub Actions

Let us now go through each of these stages. If you want to follow along, please refer to the GitHub repository: kunduso/aws-lambda-docker-terraform.

Stage 1: Provision the infrastructure to host the Docker image

This stage creates an Amazon ECR repository, which serves as the storage location for the containerized Lambda function’s Docker image. The repository is associated with a custom KMS key that encrypts the stored images. The Terraform code is in the terraform_ecr folder. The steps under this stage are:

Step 1: Create a custom KMS key and policy

This Terraform code creates an AWS KMS key and a key policy to encrypt Docker images stored in Amazon ECR.
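As a rough illustration of this step, a KMS key and its key policy could be declared along the following lines. This is a minimal sketch and not the repository's actual code: the resource names, the description, the rotation and deletion settings, and the single root-account statement are all assumptions made for the example.

```hcl
# Hypothetical sketch: names and settings are illustrative assumptions.
data "aws_caller_identity" "current" {}

resource "aws_kms_key" "ecr" {
  description             = "Key for encrypting images in Amazon ECR"
  enable_key_rotation     = true
  deletion_window_in_days = 7
}

# Key policy that lets the account's IAM policies administer the key.
resource "aws_kms_key_policy" "ecr" {
  key_id = aws_kms_key.ecr.id
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid       = "EnableAccountAdministration"
        Effect    = "Allow"
        Principal = { AWS = "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root" }
        Action    = "kms:*"
        Resource  = "*"
      }
    ]
  })
}
```

A production key policy would usually be narrower than this single statement; it is kept short here only to show how the key and the policy are linked through key_id.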

Step 2: Create an Amazon ECR repository and enable image encryption using the custom KMS key

An Amazon ECR repository is where the Docker container images are stored.

The image_tag_mutability property controls whether an existing image tag can be overwritten. Setting it to IMMUTABLE prevents a tag from being reused, so each pushed image must carry a unique tag. The encryption_configuration block associates the custom KMS key with the Amazon ECR repository.
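The two properties described above could appear in a repository definition such as the one below. It is a sketch under assumptions: the repository name and the variable holding the key ARN are hypothetical, and the repository's real configuration may set additional options.

```hcl
# Hypothetical input: ARN of the custom KMS key created in Step 1.
variable "ecr_kms_key_arn" {
  type = string
}

resource "aws_ecr_repository" "lambda_image" {
  name                 = "lambda-docker-example" # illustrative name
  image_tag_mutability = "IMMUTABLE"             # a pushed tag cannot be overwritten

  encryption_configuration {
    encryption_type = "KMS"
    kms_key         = var.ecr_kms_key_arn
  }
}
```

With IMMUTABLE tags, a pipeline typically needs a tag that changes on every build (for example, a commit SHA), since pushing an already-used tag is rejected.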

Stage 2: Build and push the Docker image into Amazon ECR

This is covered in detail in a separate note that you may read at -build-and-push-docker-image-to-amazon-ecr-using-github-actions. The code is stored in the lambda_src folder.

Stage 3: Deploy the Docker image into AWS Lambda

This stage creates an AWS Lambda function from the Docker image stored in Amazon ECR. The Terraform code is in the terraform_lambda folder. To follow security, resiliency, and observability best practices, the Lambda function’s environment variables are encrypted using a custom KMS key, the event logs are stored in an Amazon CloudWatch log group, and a dead letter queue is set up for error handling and debugging. Additionally, the SQS queue and the CloudWatch log group are encrypted using custom KMS keys. Tying all of these together, the steps in this stage are as follows.

Step 1: Create a KMS key and policy for Amazon SQS

This Terraform code creates an AWS KMS key and a key policy to encrypt the SQS queue.

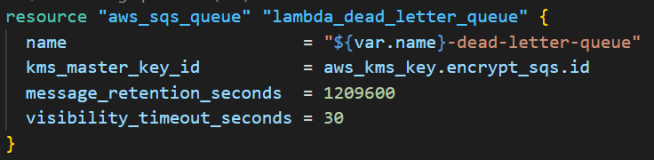

Step 2: Create the SQS queue

The SQS queue serves as a Dead Letter Queue (DLQ) for the Lambda function. When an asynchronous invocation of the Lambda function fails after all retry attempts, the failed event is sent to the DLQ for further analysis instead of being lost.
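An encrypted queue of this kind might be declared roughly as follows. The queue name, the variable for the key, and the retention and key-reuse values are assumptions chosen for illustration, not values taken from the repository.

```hcl
# Hypothetical input: ID or ARN of the custom KMS key created for SQS.
variable "sqs_kms_key_id" {
  type = string
}

resource "aws_sqs_queue" "lambda_dlq" {
  name                              = "lambda-docker-example-dlq" # illustrative name
  kms_master_key_id                 = var.sqs_kms_key_id
  kms_data_key_reuse_period_seconds = 300
  message_retention_seconds         = 1209600 # 14 days, the SQS maximum
}
```

A long retention period gives more time to inspect failed events before SQS deletes them.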

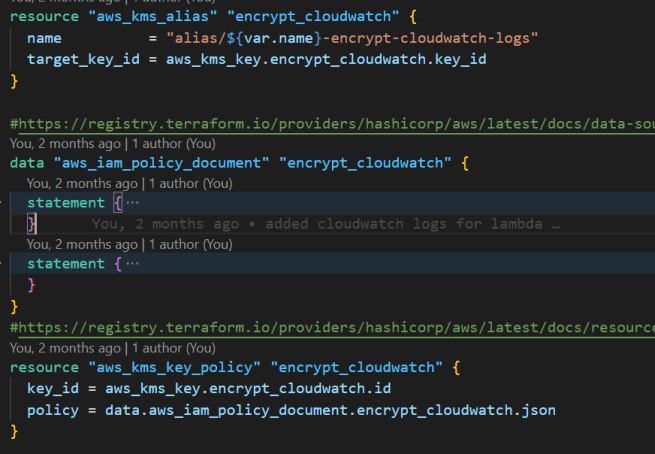

Step 3: Create a KMS key and policy for the Amazon CloudWatch Log group

This Terraform code creates an AWS KMS key and a key policy to encrypt the CloudWatch Log group.
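A key used for a log group differs from the other keys in one respect: the key policy has to allow the regional CloudWatch Logs service principal to use it. The sketch below shows that shape; the resource names and the region variable are hypothetical, and the actual policy in the repository may be scoped more tightly (for example, with an encryption-context condition).

```hcl
# Hypothetical sketch: names and the region variable are assumptions.
variable "aws_region" {
  type = string
}

data "aws_caller_identity" "current" {}

resource "aws_kms_key" "logs" {
  description         = "Key for encrypting the Lambda log group"
  enable_key_rotation = true
}

resource "aws_kms_key_policy" "logs" {
  key_id = aws_kms_key.logs.id
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid       = "EnableAccountAdministration"
        Effect    = "Allow"
        Principal = { AWS = "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root" }
        Action    = "kms:*"
        Resource  = "*"
      },
      {
        # Lets CloudWatch Logs in this region encrypt and decrypt log data.
        Sid       = "AllowCloudWatchLogs"
        Effect    = "Allow"
        Principal = { Service = "logs.${var.aws_region}.amazonaws.com" }
        Action = [
          "kms:Encrypt*",
          "kms:Decrypt*",
          "kms:ReEncrypt*",
          "kms:GenerateDataKey*",
          "kms:Describe*"
        ]
        Resource = "*"
      }
    ]
  })
}
```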

Step 4: Create an Amazon CloudWatch Log group

The log group stores the log messages emitted during AWS Lambda execution, which are helpful during review and debugging.
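A log group encrypted with the custom key could look like the sketch below. The log group name, the retention period, and the variable name are illustrative assumptions.

```hcl
# Hypothetical input: ARN of the custom KMS key created for CloudWatch Logs.
variable "logs_kms_key_arn" {
  type = string
}

resource "aws_cloudwatch_log_group" "lambda" {
  name              = "/aws/lambda/lambda-docker-example" # illustrative name
  retention_in_days = 365
  kms_key_id        = var.logs_kms_key_arn
}
```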

Step 5: Create an AWS IAM role and policy

An AWS Lambda function requires an IAM execution role. An IAM role has two kinds of policies: the assume role policy and the permission policy. The assume role policy states who or what can assume that role, and the permission policy states what operations that role can perform on which AWS cloud resources. For this function, the assume role policy states that the service principal lambda.amazonaws.com can assume the role.
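The assume role policy described above might be expressed as follows. This is a sketch rather than the repository's code: the data source name, resource name, and role name are hypothetical.

```hcl
# Trust policy: only the Lambda service may assume this role.
data "aws_iam_policy_document" "lambda_assume_role" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "lambda" {
  name               = "lambda-docker-example-role" # illustrative name
  assume_role_policy = data.aws_iam_policy_document.lambda_assume_role.json
}
```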

After the role is created, it is attached to the managed IAM policy arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole, which allows the function to write logs to CloudWatch, and to a couple of custom IAM policies. In this case, the custom policies allow the role to pull the Docker image from Amazon ECR and to send messages to the Amazon SQS queue.
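The permissions described here could be attached along these lines. Note the assumptions: the variable names are hypothetical, the exact ECR actions are a guess at what "pull the image" requires, and the two custom policies are merged into one inline policy for brevity, which may differ from how the repository structures them.

```hcl
# Hypothetical inputs for the sketch.
variable "lambda_role_name" {
  type = string
}

variable "ecr_repository_arn" {
  type = string
}

variable "dlq_arn" {
  type = string
}

# AWS-managed policy named in the text.
resource "aws_iam_role_policy_attachment" "basic_execution" {
  role       = var.lambda_role_name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

data "aws_iam_policy_document" "lambda_custom" {
  statement {
    sid       = "PullImageFromEcr"
    effect    = "Allow"
    actions   = ["ecr:GetDownloadUrlForLayer", "ecr:BatchGetImage"]
    resources = [var.ecr_repository_arn]
  }

  statement {
    sid       = "SendToDeadLetterQueue"
    effect    = "Allow"
    actions   = ["sqs:SendMessage"]
    resources = [var.dlq_arn]
  }
}

resource "aws_iam_role_policy" "lambda_custom" {
  name   = "lambda-docker-example-custom" # illustrative name
  role   = var.lambda_role_name
  policy = data.aws_iam_policy_document.lambda_custom.json
}
```

Because the queue is encrypted with a custom KMS key, the role would likely also need kms:GenerateDataKey and kms:Decrypt on that key; those statements are left out of the sketch.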

Step 6: Create the Lambda function

The AWS Lambda function consumes the Docker container image stored in Amazon ECR through the image_uri parameter, and package_type is set to “Image” to indicate a containerized Lambda function rather than a traditional zip-based deployment. The function also requires the IAM role created previously, which grants it limited access to perform specific operations, including pulling images from ECR and sending messages to the dead letter queue. The AWS KMS key encrypts the environment variables used in the function. The logging_config block names the log group that receives the execution logs and sets log_format to “JSON” and system_log_level to “INFO” for structured logging. The dead_letter_config block specifies the SQS queue to which failed invocation events are sent for error handling and debugging. Finally, the environment block passes variables to the function, though in this case it is empty because configuration is handled within the container image.
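Putting those arguments together, the function definition might resemble the sketch below. The argument names come from the description above; the function name and every variable are hypothetical stand-ins for values produced in the earlier steps.

```hcl
# Hypothetical inputs wired in from the earlier steps.
variable "image_uri" {
  type = string # e.g. <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>
}

variable "lambda_role_arn" {
  type = string
}

variable "lambda_kms_key_arn" {
  type = string
}

variable "log_group_name" {
  type = string
}

variable "dlq_arn" {
  type = string
}

resource "aws_lambda_function" "docker" {
  function_name = "lambda-docker-example" # illustrative name
  role          = var.lambda_role_arn
  package_type  = "Image" # container image instead of a zip archive
  image_uri     = var.image_uri
  kms_key_arn   = var.lambda_kms_key_arn # encrypts environment variables

  logging_config {
    log_format       = "JSON"
    log_group        = var.log_group_name
    system_log_level = "INFO"
  }

  dead_letter_config {
    target_arn = var.dlq_arn
  }

  # The article describes an empty environment block; it is omitted in this
  # sketch because no variables are passed to the function.
}
```

With package_type set to "Image", the handler and runtime arguments are not set on the resource, since both are defined by the container image.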

Those are all the AWS Cloud resources required to create a Lambda function with a Docker image. Now, let’s understand the provisioning process in the next section.

Stage 4: Automating with GitHub Actions

To fully automate this deployment workflow, a GitHub Actions pipeline orchestrates the entire process with three dependent jobs:

– ecr_create: Provisions the Amazon ECR repository using Terraform.

– docker_build_push: Builds the Docker image, tags it, and pushes it to ECR.

– deploy_lambda: Deploys the Lambda function from the container image stored in ECR using Terraform.

Each job passes critical information to the next job:

– the ECR repository URI from the first job is used to tag and push the image in the second job

– the image tag from the second job is referenced in the third to deploy the exact version to Lambda.
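On the Terraform side, the hand-off between jobs could be modeled with two input variables in the Lambda configuration, as sketched below. The variable names are assumptions, and the workflow in the repository may pass these values differently.

```hcl
# Hypothetical inputs supplied by the pipeline.
variable "ecr_repository_url" {
  type        = string
  description = "Repository URL produced by the ECR job"
}

variable "image_tag" {
  type        = string
  description = "Tag pushed by the Docker build job"
}

locals {
  # Full image reference that the Lambda function would consume.
  image_uri = "${var.ecr_repository_url}:${var.image_tag}"
}

output "image_uri" {
  value = local.image_uri
}
```

A workflow step could supply the values with -var arguments to terraform apply, or through environment variables prefixed with TF_VAR_ (for example, TF_VAR_image_tag).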

The workflow is designed to run terraform apply only when changes are merged into the main branch. This ensures all infrastructure modifications are reviewed through pull requests, maintaining best practices for CI/CD and infrastructure as code.

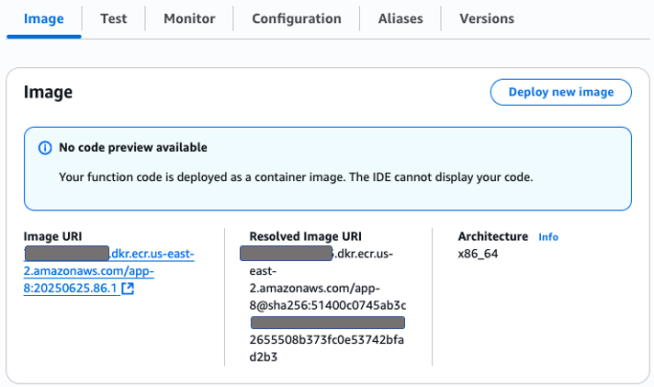

After the pipeline was executed, the Amazon ECR repository was created. Then, the Docker image was created, tagged, and pushed into the Amazon ECR Repository. Finally, the Lambda function was successfully created. The image below shows the Image URI property of the Lambda function.

Once the Lambda function was deployed, a quick test returned the success message defined in the handler.py file stored inside the Docker container.

That brings us to the end of this note on how to securely deploy a Docker image from Amazon ECR to AWS Lambda using Terraform and GitHub Actions. By combining Docker, Terraform, and GitHub Actions, this approach offers a robust, secure, and repeatable way to deploy AWS Lambda functions. It removes the constraints of native Lambda runtimes, provides encryption and logging best practices, and promotes a DevOps-friendly pipeline through infrastructure-as-code and CI/CD automation.