In addition to hosting Git repositories, GitHub offers workflow automation through GitHub Actions, which can test, build, and deploy code in response to triggers such as code commits, pull requests, or scheduled events. These workflows run on runners, which are virtual or physical machines that execute the workflow steps.

GitHub-hosted runners are free with unlimited minutes for public repositories, but the free plan limits private repositories to 2,000 minutes per month. That limit is one reason organizations often prefer self-hosted runners in their own infrastructure. Other reasons include enhanced security and compliance, access to private networks and resources, custom software requirements, improved performance, and cost efficiencies.

As of August 2025, GitHub offers multiple account types, including personal/individual, organization, and enterprise accounts. While self-hosted runners can be created in individual accounts, they are repository-scoped and usable only within the repository where they’re registered. Alternatively, if a self-hosted runner is created in an organization (or enterprise), it can be configured to be used by selected repositories or all repositories belonging to that organization (or enterprise). Consequently, organization-level self-hosted runners offer superior scalability and cost-effectiveness by serving multiple repositories from a shared infrastructure pool compared to individual repository runners.

In this note, we’ll learn how to securely build self-hosted runners on Amazon EC2 instances using auto scaling groups and Terraform.

This solution adheres to several security best practices. If you are interested in following along with the code, please refer to the GitHub repository: kunduso-org/github-self-hosted-runner-amazon-ec2-terraform.

Solution Overview:

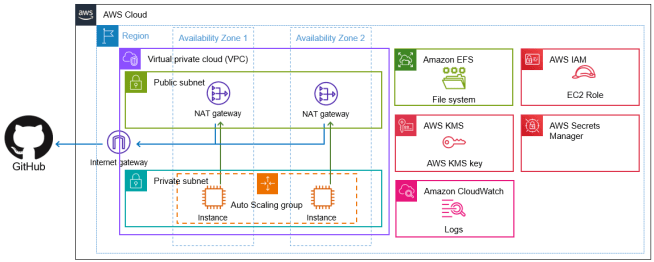

This solution deploys GitHub self-hosted runners using an Auto Scaling Group of Amazon EC2 instances within a secure, multi-AZ VPC architecture. The infrastructure spans two availability zones, each with a public and a private subnet. Runners operate in the private subnets, so they are not directly reachable from the internet; their outbound internet access goes through NAT gateways.

Key components include encrypted EFS storage for persistent workspace data, customer-managed KMS keys for comprehensive encryption, and AWS Secrets Manager for secure GitHub App credential storage. CloudWatch log groups provide structured logging throughout the runner lifecycle, capturing registration, execution, and operational events for monitoring and troubleshooting.

Configuration management is handled through SSM Parameter Store, which securely stores the deregistration script that ensures proper cleanup when instances terminate. The GitHub runner registration process is automated through a user data script that executes during EC2 instance launch, handling authentication, workspace setup, and service configuration.

The architecture ensures high availability, security, and scalability while maintaining cost efficiency through the use of shared infrastructure and persistent storage.

Prerequisites

This use case requires two prerequisites. These are:

PreReq-1. Administrative access to an AWS account to deploy the AWS cloud resources and configure IAM roles for GitHub Actions integration

PreReq-2. Administrative access to a GitHub organization or enterprise to create GitHub Apps and manage self-hosted runners

Implementation

This implementation has two sequential steps:

Implementation-1. Set up the GitHub App: Configuring authentication credentials for secure API access, and

Implementation-2. Provision Runner Infrastructure: Creating AWS cloud resources to support GitHub runner infrastructure, such as Virtual Private Cloud (VPC) and Auto Scaling Groups.

Additionally, as part of de-registration and cleanup, a subsequent step can also be added:

Implementation-3. Lifecycle Management: Automated de-registration using Lambda and lifecycle hooks. This is covered in the note “Automated GitHub Self-Hosted Runner Cleanup: Lambda Functions and Auto Scaling Lifecycle Hooks”.

Let’s dive deeper into each, starting with setting up the GitHub App.

Implementation-1. GitHub App Setup

A GitHub App is a secure authentication mechanism that allows external applications and services to interact with GitHub’s API on behalf of an organization or user. Unlike personal access tokens, GitHub Apps use short-lived JWT tokens and provide fine-grained permissions, making them the recommended approach for automated systems and integrations.

For self-hosted runner authentication, GitHub Apps require three essential components that work together in a secure authentication flow. The App ID serves as the unique identifier for the GitHub App within the GitHub ecosystem. The private key (certificate) is used to cryptographically sign JWT tokens, proving the authenticity of API requests. The Installation ID identifies the specific installation of the app within the organization, determining which repositories and resources the app can access.

During runner registration, the EC2 instance uses these credentials to generate a signed JWT token with the App ID and private key. This JWT token is then exchanged with GitHub’s API using the Installation ID to obtain a short-lived access token. Finally, this access token is used to request a runner registration token, which allows the instance to register itself as a self-hosted runner with the organization. This multi-step authentication process ensures that only authorized infrastructure can register runners while maintaining security through time-limited credentials and cryptographic verification. For more information, please refer to GitHub App documentation.

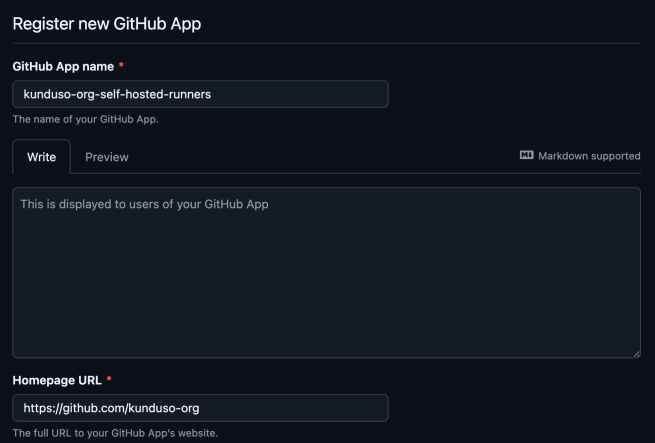

To create a new GitHub App in your organization, navigate to the GitHub organization settings → Developer settings (scroll all the way down) → GitHub Apps → New GitHub App. Provide a unique name and a homepage URL, as shown in the screenshot below.

Please note that the Homepage URL doesn’t need to be the GitHub organization URL for this use case. The Homepage URL is a reference field that GitHub displays in the app’s public information; it’s not functionally required for self-hosted runner authentication.

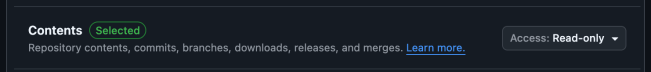

After creating the app, the next step is to configure permissions. There are two types of permissions: repository and organization. Below are the Repository permissions to enable:

And the following are the Organization permissions:

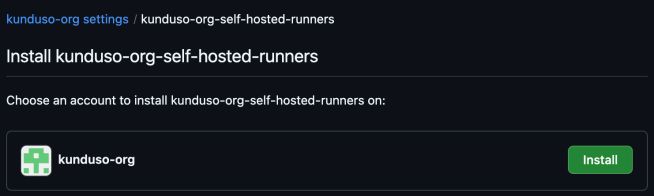

Then, install the app. On the right-hand panel, select “Install App”.

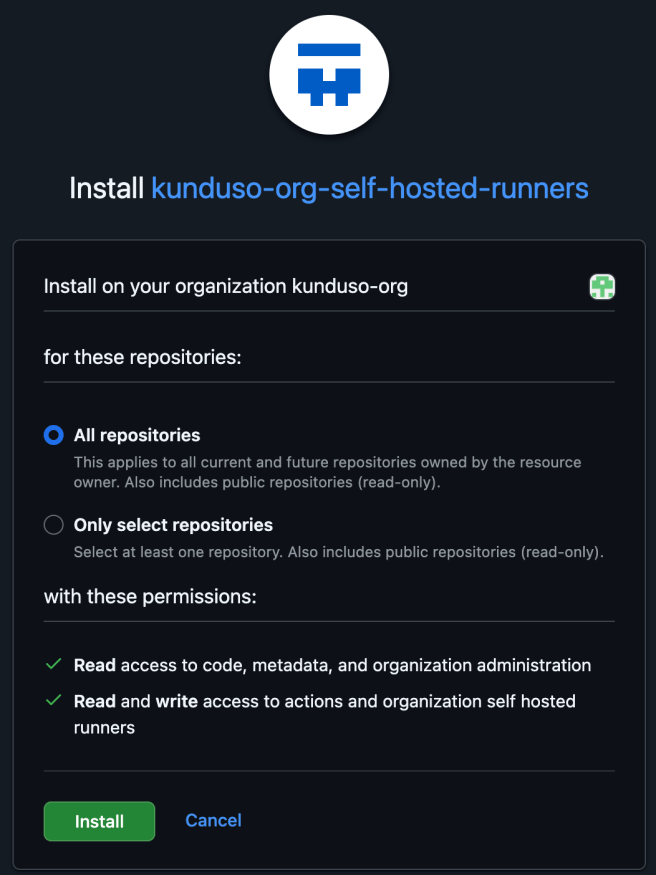

Click on Install. This will open a new page similar to the one below, where you’ll have to confirm the repositories that this app will have access to.

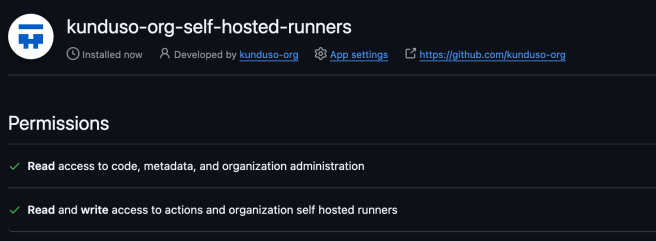

Since this request was for a self-hosted GitHub runner, it was installed for all the repositories in the organization. Confirm and click on Install. You will then see the image below, which displays the name of the app and its permissions.

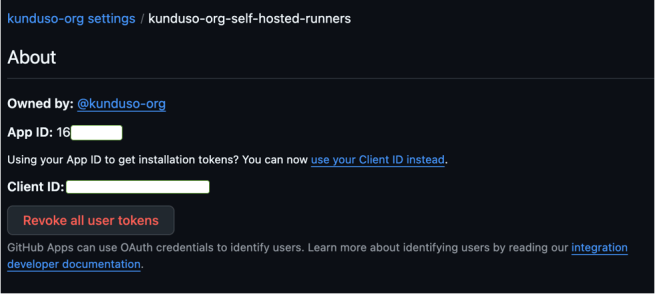

In the URL https://github.com/organizations/kunduso-org/settings/installations/12345678, the number 12345678 is the Installation ID. On the same page, click on the “App settings” button. That will display the App ID. Please note that too. These are sensitive, so please do not share them.

The last information is the private key. To get that:

1. On your GitHub App page, scroll down to the “Private keys” section

2. Click “Generate a private key”

3. A .pem file will automatically download to your computer

4. Open this file in a text editor to review its contents.

Now that you have all three GitHub App credentials, the next step is to securely store them in AWS Secrets Manager for use by your EC2 instances.

Summary – What you should have:

– ✅ App ID (from the app settings page)

– ✅ Installation ID (from the installation URL)

– ✅ Private Key (downloaded .pem file)

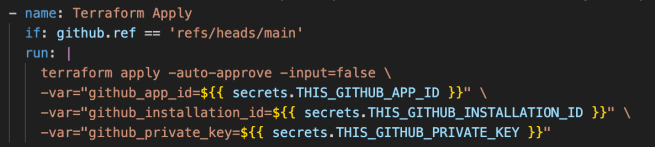

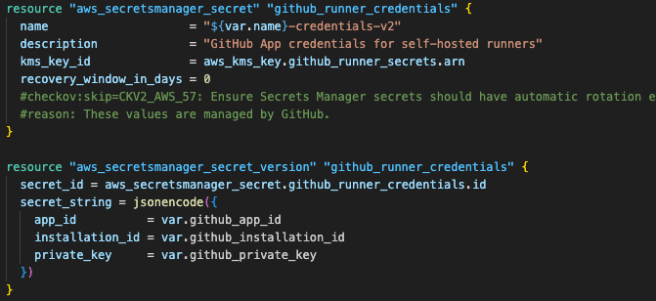

The Amazon EC2 instances need these three values to authenticate to the GitHub API during the registration process. Because the values are sensitive, they are stored in an AWS Secrets Manager secret, and the Amazon EC2 instance role is granted permission to read that secret. To get the values into the secret without exposing them, store them as GitHub secrets and reference them in the Terraform provisioning pipeline. The code snippet below shows how to do that.
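
As a minimal sketch of that pattern, and not the repository's actual code, the Terraform below assumes the pipeline exposes the three GitHub secrets as `TF_VAR_`-prefixed environment variables. The variable names, the secret name, and the JSON field names are hypothetical.

```hcl
# Hypothetical input variables. In a GitHub Actions pipeline they could be
# populated from GitHub secrets through environment variables such as
# TF_VAR_github_app_id.
variable "github_app_id" {
  type      = string
  sensitive = true
}

variable "github_app_installation_id" {
  type      = string
  sensitive = true
}

variable "github_app_private_key" {
  type      = string
  sensitive = true
}

# ARN of the customer-managed KMS key for the secret (assumed to exist already).
variable "secrets_kms_key_arn" {
  type = string
}

resource "aws_secretsmanager_secret" "github_app" {
  name       = "github-runner/app-credentials" # hypothetical name
  kms_key_id = var.secrets_kms_key_arn
}

# Store all three credentials together as one JSON document.
resource "aws_secretsmanager_secret_version" "github_app" {
  secret_id = aws_secretsmanager_secret.github_app.id
  secret_string = jsonencode({
    app_id          = var.github_app_id
    installation_id = var.github_app_installation_id
    private_key     = var.github_app_private_key
  })
}
```

Marking the variables as `sensitive` keeps their values out of plan output, but they are still written to the Terraform state, so the state backend should be encrypted and access-controlled as well.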

If you are new to GitHub secrets and want to learn how to pass them into an AWS Secrets Manager secret in a secure approach using GitHub Actions and Terraform, please check create-aws-secrets-manager-secret-using-terraform-secure-variables-and-github-actions-secrets.

That brings us to the end of section 1. By this time, you have the App ID, the Installation ID, and the Private Key, all of which are stored as GitHub organization secrets.

Implementation-2. Provision Runner Infrastructure

In the previous section, we created the sensitive variables and learned how to store them in the AWS Secrets Manager secret. In this section, we’ll review the AWS cloud resources required to provision the Amazon EC2 instances, which will run as the GitHub self-hosted runners. There are broadly seven categories of resources required. These are:

1. Establish network layout with VPC

2. Implement encryption with KMS keys

3. Provision shared storage with EFS

4. Configure monitoring

5. Store secrets and configuration parameters

6. Configure IAM roles and permissions

7. Deploy compute resources with Auto Scaling

Let’s review these in detail, starting with the network.

Implementation-2.1. Establish network layout with VPC

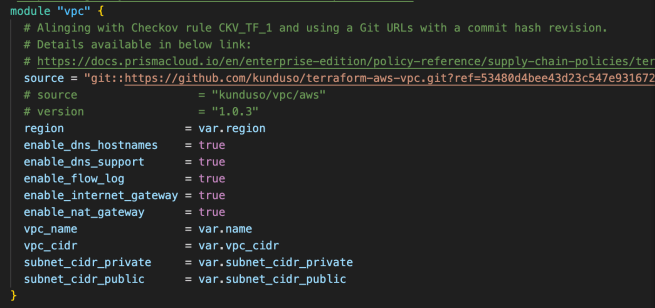

This use case calls a module to provision the network components.

This module, with the specified variables, provisions a VPC with four subnets (two private and two public) spread across two availability zones. It also creates an internet gateway and associates it with the public subnets, creates two NAT gateways (one in each public subnet), and updates the private subnet route tables to reach the internet through the NAT gateways. There are two security groups (not shown here), one to allow traffic for the EFS and the other to allow traffic for the Amazon EC2 instance.
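
A layout like this could be sketched as follows. This is only an approximation: it uses the public `terraform-aws-modules/vpc/aws` registry module as a stand-in, which may not be the module the repository calls, and the name and CIDR ranges are hypothetical.

```hcl
# Look up the availability zones of the configured region.
data "aws_availability_zones" "available" {
  state = "available"
}

module "vpc" {
  # Public registry module used as a stand-in; the repository's module may differ.
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0"

  name = "github-runner" # hypothetical name
  cidr = "10.0.0.0/16"   # hypothetical address range

  # Two availability zones, each with one public and one private subnet.
  azs             = slice(data.aws_availability_zones.available.names, 0, 2)
  public_subnets  = ["10.0.0.0/24", "10.0.1.0/24"]
  private_subnets = ["10.0.10.0/24", "10.0.11.0/24"]

  # One NAT gateway per availability zone for outbound access
  # from the private subnets.
  enable_nat_gateway     = true
  single_nat_gateway     = false
  one_nat_gateway_per_az = true
}
```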

Implementation-2.2. Enable encryption with KMS for all supported resources

Three customer-managed KMS keys support encryption: one each for the CloudWatch Logs log groups, the Secrets Manager secret, and the SSM Parameter Store parameter. Each key has an associated key policy that restricts access to it.
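
To illustrate one of the three, here is a hedged sketch of a key for CloudWatch Logs with a restrictive key policy. The resource names and the `aws_region` variable are hypothetical, and the policy statements follow the general pattern AWS documents for log group encryption rather than the repository's exact policy.

```hcl
# Region the log groups live in (hypothetical variable).
variable "aws_region" {
  type = string
}

data "aws_caller_identity" "current" {}

resource "aws_kms_key" "logs" {
  description             = "Encrypts GitHub runner CloudWatch log groups"
  enable_key_rotation     = true
  deletion_window_in_days = 7
}

data "aws_iam_policy_document" "logs_key" {
  # Let IAM policies in this account control administration of the key.
  statement {
    sid       = "AllowAccountAdministration"
    effect    = "Allow"
    actions   = ["kms:*"]
    resources = ["*"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }

  # Let CloudWatch Logs use the key, but only for log groups in this account.
  statement {
    sid    = "AllowCloudWatchLogs"
    effect = "Allow"
    actions = [
      "kms:Encrypt*",
      "kms:Decrypt*",
      "kms:ReEncrypt*",
      "kms:GenerateDataKey*",
      "kms:Describe*",
    ]
    resources = ["*"]

    principals {
      type        = "Service"
      identifiers = ["logs.${var.aws_region}.amazonaws.com"]
    }

    condition {
      test     = "ArnLike"
      variable = "kms:EncryptionContext:aws:logs:arn"
      values   = ["arn:aws:logs:${var.aws_region}:${data.aws_caller_identity.current.account_id}:log-group:*"]
    }
  }
}

resource "aws_kms_key_policy" "logs" {
  key_id = aws_kms_key.logs.id
  policy = data.aws_iam_policy_document.logs_key.json
}
```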

Implementation-2.3. Provision shared storage with EFS

Amazon Elastic File System (EFS) provides persistent, shared storage that survives instance termination and can be accessed simultaneously by multiple runner instances.

The EFS file system is mounted at /home/runner/_work on each runner instance.
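
A minimal sketch of the storage resources might look like the following. The variable and resource names are hypothetical, and the subnet IDs and security group are assumed to come from the network step.

```hcl
# IDs of the two private subnets and the EFS security group (assumed inputs).
variable "private_subnet_ids" {
  type = list(string)
}

variable "efs_security_group_id" {
  type = string
}

resource "aws_efs_file_system" "runner_workspace" {
  # Encryption at rest. Without kms_key_id, EFS uses the AWS-managed key.
  encrypted = true

  tags = {
    Name = "github-runner-workspace" # hypothetical name
  }
}

# One mount target per private subnet so runners in either
# availability zone can mount the file system at /home/runner/_work.
resource "aws_efs_mount_target" "runner_workspace" {
  count = length(var.private_subnet_ids)

  file_system_id  = aws_efs_file_system.runner_workspace.id
  subnet_id       = var.private_subnet_ids[count.index]
  security_groups = [var.efs_security_group_id]
}
```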

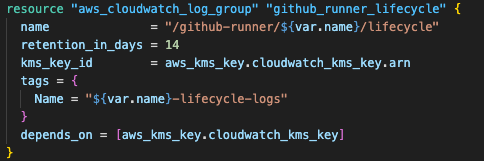

Implementation-2.4. Configure comprehensive monitoring

The CloudWatch log groups capture the complete runner lifecycle with structured logging.

The logging structure provides visibility into three key phases:

Registration: Instance startup, GitHub authentication, and runner registration

Execution: GitHub Actions job processing and performance metrics

De-registration: Cleanup processes when instances terminate.
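
One way to express these phases in Terraform is a log group per phase. This is a sketch under that assumption; the naming scheme, the retention period, and the `logs_kms_key_arn` variable are hypothetical.

```hcl
# ARN of the customer-managed KMS key for CloudWatch Logs (assumed input).
variable "logs_kms_key_arn" {
  type = string
}

locals {
  # One log group per lifecycle phase; the naming scheme is hypothetical.
  runner_log_phases = toset(["registration", "execution", "deregistration"])
}

resource "aws_cloudwatch_log_group" "runner" {
  for_each = local.runner_log_phases

  name              = "/github-runner/${each.key}"
  retention_in_days = 30 # hypothetical retention period
  kms_key_id        = var.logs_kms_key_arn
}
```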

To learn more about how to integrate CloudWatch log group into the user_data script, check install-and-configure-cloudwatch-logs-agent-on-amazon-ec2.

Implementation-2.5. Store secrets and configuration parameters

AWS Secrets Manager securely stores the GitHub App credentials with automatic encryption.

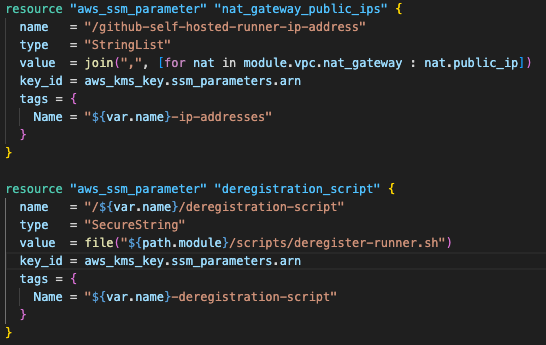

SSM Parameter Store holds configuration data and operational scripts. The deregistration script is stored as an SSM parameter to keep the user data script manageable in size, as embedding the entire deregistration logic would make the launch template unwieldy. This script is essential for the automated cleanup process (covered in a subsequent post) that ensures proper de-registration of runners when instances terminate.

The user_data script retrieves this deregistration script during instance initialization and configures it as a systemd service for execution during shutdown. This approach separates sensitive credentials from configuration data while maintaining encryption for both, and keeps the infrastructure code modular and maintainable.
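
A hedged sketch of storing the script as an encrypted parameter is shown below. The parameter name, the script path, and the `ssm_kms_key_arn` variable are hypothetical.

```hcl
# ARN of the customer-managed KMS key for the parameter (assumed input).
variable "ssm_kms_key_arn" {
  type = string
}

resource "aws_ssm_parameter" "deregistration_script" {
  name   = "/github-runner/deregistration-script" # hypothetical name
  type   = "SecureString"
  key_id = var.ssm_kms_key_arn

  # Read the script from a file kept next to the Terraform code.
  # Standard-tier parameters hold up to 4 KB; a larger script would
  # need the Advanced tier.
  value = file("${path.module}/scripts/deregister-runner.sh")
}
```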

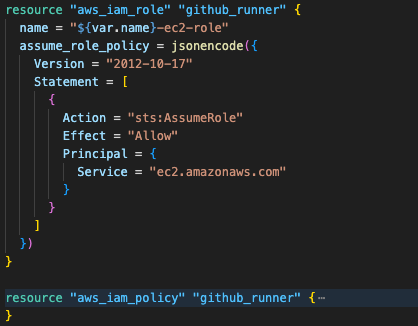

Implementation-2.6. Configure IAM roles and permissions

This solution requires two distinct IAM roles with different purposes and security scopes.

Implementation-2.6.1 EC2 Instance Role

This is the primary role attached to EC2 instances, which provides the minimum permissions required for runner operations during the registration process.

The IAM policy follows the principle of least privilege, granting only necessary permissions:

Secrets Manager: Read access to GitHub App credentials

KMS: Decrypt permissions for encrypted resources

EFS: Mount and write permissions for shared workspace

SSM: Read access to configuration parameters
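
The four permission groups above could be sketched as an inline policy on the instance role. The role name, statement IDs, and input variables are hypothetical; the ARNs are assumed to come from the resources created in the earlier steps.

```hcl
variable "github_app_secret_arn" {
  type = string
}

variable "kms_key_arns" {
  type = list(string)
}

variable "efs_file_system_arn" {
  type = string
}

variable "deregistration_parameter_arn" {
  type = string
}

# Only the EC2 service may assume the instance role.
data "aws_iam_policy_document" "ec2_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "runner" {
  name               = "github-runner-instance-role" # hypothetical name
  assume_role_policy = data.aws_iam_policy_document.ec2_trust.json
}

data "aws_iam_policy_document" "runner" {
  statement {
    sid       = "ReadGitHubAppSecret"
    actions   = ["secretsmanager:GetSecretValue"]
    resources = [var.github_app_secret_arn]
  }

  statement {
    sid       = "DecryptWithRunnerKeys"
    actions   = ["kms:Decrypt"]
    resources = var.kms_key_arns
  }

  statement {
    sid       = "MountSharedWorkspace"
    actions   = ["elasticfilesystem:ClientMount", "elasticfilesystem:ClientWrite"]
    resources = [var.efs_file_system_arn]
  }

  statement {
    sid       = "ReadDeregistrationScript"
    actions   = ["ssm:GetParameter"]
    resources = [var.deregistration_parameter_arn]
  }
}

resource "aws_iam_role_policy" "runner" {
  name   = "github-runner-least-privilege"
  role   = aws_iam_role.runner.id
  policy = data.aws_iam_policy_document.runner.json
}

# The instance profile is what the launch template attaches to instances.
resource "aws_iam_instance_profile" "runner" {
  name = "github-runner-instance-profile"
  role = aws_iam_role.runner.name
}
```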

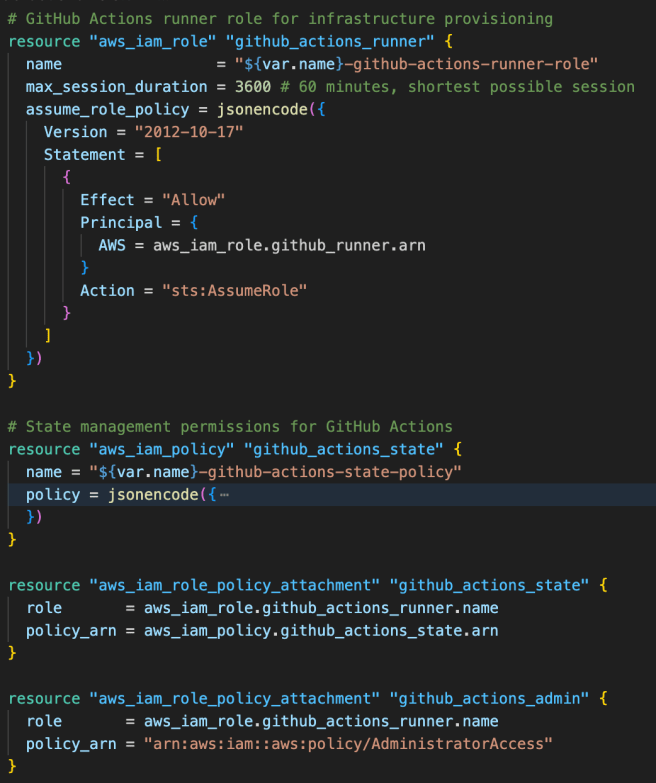

Implementation-2.6.2 GitHub Actions Assumable Role

This is a separate role that enables GitHub Actions workflows running on the self-hosted runners to perform infrastructure operations.

EC2 instances assume this role during GitHub Actions workflows, which gives a workflow temporary elevated permissions with a limited session duration (60 minutes). The role includes:

AdministratorAccess: Full AWS permissions for infrastructure management

S3 State Access: Specific permissions for Terraform state management

Time-Limited Sessions: Maximum 1-hour session duration to minimize security exposure
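
A minimal sketch of such a role, assuming the trust policy names the runner instance role as the only principal, is shown below. The role name and the `runner_instance_role_arn` variable are hypothetical, and the S3 state permissions are omitted for brevity.

```hcl
# ARN of the EC2 instance role from the previous section (assumed input).
variable "runner_instance_role_arn" {
  type = string
}

# Only the runner instance role may assume the elevated role.
data "aws_iam_policy_document" "github_actions_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "AWS"
      identifiers = [var.runner_instance_role_arn]
    }
  }
}

resource "aws_iam_role" "github_actions" {
  name               = "github-actions-assumable-role" # hypothetical name
  assume_role_policy = data.aws_iam_policy_document.github_actions_trust.json

  # Cap sessions at one hour (3600 seconds).
  max_session_duration = 3600
}

# Attach the AWS-managed AdministratorAccess policy.
resource "aws_iam_role_policy_attachment" "admin" {
  role       = aws_iam_role.github_actions.name
  policy_arn = "arn:aws:iam::aws:policy/AdministratorAccess"
}
```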

This dual-role approach provides several security advantages:

1. Separation of Concerns: Day-to-day runner operations use minimal permissions

2. Temporary Elevation: Administrative access only during workflow execution

3. Time Constraints: Limited session duration reduces risk of credential compromise

4. Audit Trail: Clear distinction between operational and administrative activities

The EC2 instances operate with minimal permissions by default, assuming elevated privileges only when requested by GitHub Actions workflows.

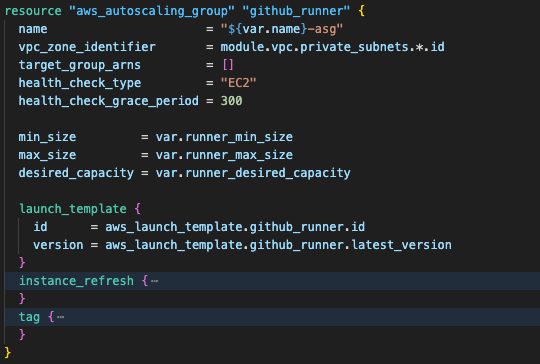

Implementation-2.7. Deploy compute resources with Auto Scaling

The last resource is the Auto Scaling Group, which ensures consistent runner availability.

The launch template defines the instance configuration and includes the user_data script that handles:

1. System package installation (Docker, Terraform, development tools)

2. EFS mounting with optimized NFS parameters

3. GitHub App authentication and JWT token generation

4. Runner registration with the GitHub organization

5. Service configuration for automatic startup
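
The launch template and Auto Scaling Group could be sketched roughly as follows. All variable names, the template file `user_data.sh.tpl`, its template variables, and the capacity numbers are hypothetical placeholders rather than the repository's values.

```hcl
variable "ami_id" {
  type = string
}

variable "instance_type" {
  type    = string
  default = "t3.medium" # hypothetical default
}

variable "runner_security_group_id" {
  type = string
}

variable "instance_profile_name" {
  type = string
}

variable "private_subnet_ids" {
  type = list(string)
}

variable "github_app_secret_name" {
  type = string
}

resource "aws_launch_template" "runner" {
  name_prefix            = "github-runner-"
  image_id               = var.ami_id
  instance_type          = var.instance_type
  vpc_security_group_ids = [var.runner_security_group_id]

  iam_instance_profile {
    name = var.instance_profile_name
  }

  # Render the registration script and pass it as base64-encoded user data.
  user_data = base64encode(templatefile("${path.module}/user_data.sh.tpl", {
    secret_name = var.github_app_secret_name
  }))
}

resource "aws_autoscaling_group" "runner" {
  name_prefix         = "github-runner-"
  min_size            = 2 # hypothetical capacity values
  max_size            = 4
  desired_capacity    = 2
  vpc_zone_identifier = var.private_subnet_ids

  launch_template {
    id      = aws_launch_template.runner.id
    version = "$Latest"
  }

  tag {
    key                 = "Name"
    value               = "github-runner"
    propagate_at_launch = true
  }
}
```

Placing the group in both private subnets lets it replace a failed instance in either availability zone.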

This infrastructure provides a robust, scalable foundation for GitHub Actions runners with enterprise-grade security, monitoring, and reliability features.

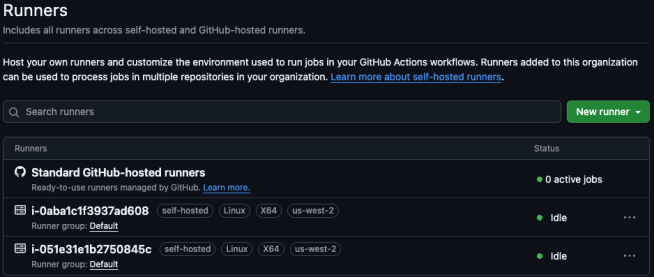

Once the Terraform code was ready, it was deployed using GitHub’s hosted runners.

Deployment and Validation

After successful deployment, the Amazon EC2 instances automatically registered themselves as GitHub self-hosted runners with the organization.

Verification-1: Successful Runner Registration

To view the registered runners, navigate to the organization settings, expand “Actions” under the “Code, planning, and automation” section, and then click on “Runners”. The following screenshot shows the self-hosted runners successfully registered and available in the GitHub organization:

Each runner is identified by its EC2 instance ID and shows the configured labels (region-based) for workflow targeting.

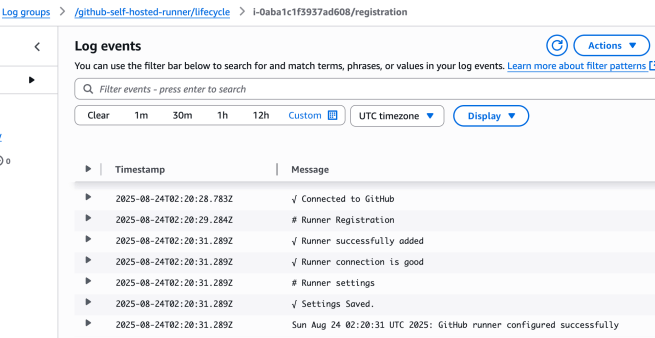

Verification-2: CloudWatch Logging Verification

The logging setup captures the complete runner lifecycle. The CloudWatch logs show successful registration and execution events.

The structured logging provides visibility into:

Registration Phase: Instance startup, GitHub authentication, and runner registration

Execution Phase: GitHub Actions job processing and performance metrics

Operational Events: EFS mounting, service configuration, and health checks

Security Best Practices Implemented

This solution incorporates multiple layers of security following AWS and GitHub best practices to ensure enterprise-grade protection. These are:

Security-Best-Practice-1. Network Security:

Runners operate without direct internet exposure

Controlled outbound internet access for package downloads

Minimal required ports and protocols only

Security-Best-Practice-2. Encryption at Rest and in Transit:

Data is encrypted at rest, with organization-controlled keys for logs, secrets, and configuration parameters

Shared workspace data protected at rest (uses AWS-managed keys)

GitHub App credentials are encrypted and access-controlled

Monitoring data encrypted with dedicated KMS keys

Configuration data encrypted with customer-managed keys

Security-Best-Practice-3. Identity and Access Management:

EC2 instances run with minimal permissions for day-to-day operations

GitHub App JWT tokens with short expiration (10 minutes)

Distinct roles for operational vs. administrative tasks on the EC2 instances

60-minute maximum for elevated permissions

Security-Best-Practice-4. Operational Security:

Secure GitHub App-based authentication eliminates long-lived tokens

Complete audit trail for compliance and troubleshooting

Version-controlled, reviewable infrastructure changes

GitHub App credentials stored securely in AWS Secrets Manager with encryption

Conclusion

That brings us to the end of this comprehensive guide on building secure, scalable GitHub self-hosted runners on AWS. We’ve covered the complete infrastructure setup from GitHub App authentication through Auto Scaling Groups, encryption, monitoring, and IAM security.

This implementation provides a production-ready foundation that addresses the key challenges of self-hosted runner management: security, scalability, and operational efficiency. The solution eliminates common pitfalls, such as long-lived credentials, while providing enterprise-grade features, including comprehensive logging, encrypted storage, and automated scaling.

In our next post, we tackle the critical challenge of automated runner deregistration – ensuring proper cleanup when instances terminate to prevent orphaned runners and maintain a clean GitHub organization. We explore how AWS Lambda functions, Auto Scaling lifecycle hooks, and SNS notifications work together to create a robust cleanup mechanism that handles various termination scenarios, including planned scaling events, instance failures, and spot instance interruptions.

If you found this guide helpful, please try it in your own environment and share your experiences. The complete code is available in the GitHub repository: kunduso-org/github-self-hosted-runner-amazon-ec2-terraform for you to explore and adapt to your specific needs.
