When it comes to delivering continuous value to customers, CI-CD is the de facto standard. Container technology supports that goal by packaging an application and its dependencies into a unit that can be hosted and scaled independently of other applications. DevOps engineers and application developers combined these ideas into CI-CD processes that build and deploy containers.

At a high level, a typical CI-CD cycle involving containers comprises the following steps:

Step 1: create the application code (or an executable, depending on the technology) that a cloud provider will host in a container,

Step 2: create and tag a Docker image that contains the application (or executable) and all its dependencies,

Step 3: upload the Docker image to an image repository, and

Step 4: create a container from the image stored in the image repository.

In this note and the associated GitHub repository, I share the concept and code for the first three steps: a continuous integration process that builds a Docker image and uploads it to an image repository in Amazon Elastic Container Registry (Amazon ECR) using Azure Pipelines, the Docker CLI, and AWS Tools for PowerShell. If you are familiar with these underlying technologies, you should be able to do the same by the end of this note.

This process has two prerequisites: an IAM user with permission to push images to Amazon ECR, and an Amazon ECR repository to host the image.

Prerequisite 1: I followed the documentation at aws-docs-image-push-iam-policy and created an IAM policy with the required permissions from the iam-policy.json file.

Note: This file is available in the .\iam-policy folder in my GitHub repository: kunduso/app-one.

As you can see in the image above, the policy file has two extra permissions, ecr:DescribeRepositories and ecr:CreateRepository. They allow the IAM user to check whether an Amazon ECR repository exists and to create it if it does not.
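For readers who prefer to see the permissions as data, here is a minimal Python sketch that builds a policy document of that shape. It assumes the push actions listed in the AWS documentation for pushing an image to Amazon ECR, plus the two repository actions mentioned above; the actual iam-policy.json in kunduso/app-one may differ, and the name build_policy is hypothetical. Resource is "*" because ecr:GetAuthorizationToken cannot be scoped to a single repository; a stricter policy would move the repository actions into a second statement scoped to the repository ARN.

```python
import json

# Push permissions as listed in the AWS ECR documentation for pushing an image
# (assumption: the repository's iam-policy.json may list them differently).
PUSH_ACTIONS = [
    "ecr:GetAuthorizationToken",
    "ecr:BatchCheckLayerAvailability",
    "ecr:InitiateLayerUpload",
    "ecr:UploadLayerPart",
    "ecr:CompleteLayerUpload",
    "ecr:PutImage",
]

# The two extra actions that let the pipeline check for and create the repository.
REPO_ACTIONS = ["ecr:DescribeRepositories", "ecr:CreateRepository"]


def build_policy(resource: str = "*") -> dict:
    """Return an IAM policy document granting ECR push plus repository management."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": PUSH_ACTIONS + REPO_ACTIONS,
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, e.g. to compare against iam-policy.json.
    print(json.dumps(build_policy(), indent=2))
```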

I then attached the IAM policy to an IAM user whose credentials were stored securely in the Azure DevOps Library. If you are new to the Azure DevOps Library and want to know how to access secure variables from Azure Pipelines, check out this note: Manage secure variables with Azure DevOps Library and Azure Pipelines.

Prerequisite 2: Although I could have created the Amazon ECR repository in advance, I handled it in the azure-pipelines.yml file with a check, shown in the image of the code from my GitHub repository below. The pipeline checks whether the repository exists and creates it if it does not, which is why the iam-policy.json file needs the two additional permissions described above.

The remaining work was to automate building and pushing a Docker image to the Amazon ECR repository. Because this automation runs in Azure Pipelines, defined in the azure-pipelines.yml file, a few supporting steps were also needed.

Variables:

I created library variables to securely store the access_key, secret_key, and aws_account_number associated with the IAM user. This IAM user had the IAM policy attached so that it could communicate with the Amazon ECR repository.
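Azure Pipelines does not expose secret variables to scripts automatically; a step has to map them into its environment explicitly (for example with an env: block). Assuming such a mapping exists, a script can read the values and fail fast when one is missing. The following is a minimal Python sketch; the environment-variable names ACCESS_KEY, SECRET_KEY, and AWS_ACCOUNT_NUMBER and the helper load_secrets are hypothetical, and the author's pipeline uses PowerShell rather than Python.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping


@dataclass(frozen=True)
class PipelineSecrets:
    # repr=False keeps the key material out of any accidental print or log line.
    access_key: str = field(repr=False)
    secret_key: str = field(repr=False)
    aws_account_number: str


# Hypothetical environment-variable names; the step's env: mapping decides the real ones.
ENV_NAMES = {
    "access_key": "ACCESS_KEY",
    "secret_key": "SECRET_KEY",
    "aws_account_number": "AWS_ACCOUNT_NUMBER",
}


def load_secrets(env: Mapping[str, str] = os.environ) -> PipelineSecrets:
    """Read the library variables from the environment, failing fast if any is missing."""
    missing = [name for name in ENV_NAMES.values() if not env.get(name)]
    if missing:
        # Report only the variable names, never their values.
        raise RuntimeError("missing pipeline variables: " + ", ".join(missing))
    return PipelineSecrets(**{attr: env[name] for attr, name in ENV_NAMES.items()})
```

Passing an explicit mapping, as the env parameter allows, makes the function easy to exercise without touching the real environment.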

Install AWS PowerShell Tools:

Because the pipeline uses AWS Tools for PowerShell, I automated installing all the required modules. You can read more about that at Install AWS.Tools; the InstallAWSTools.ps1 PowerShell script in my GitHub repository has all the details.

Set AWS Credentials:

The next step created a local credential profile that the subsequent steps referenced when calling the AWS APIs. The details are available at specifying-your-aws-credentials.
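The pipeline creates this profile with AWS Tools for PowerShell. To show what a named profile contains, here is a Python sketch that writes one in the shared credentials file format: an INI section named after the profile, holding aws_access_key_id and aws_secret_access_key. The profile name app-one-ci, the file path, and the helper write_profile are hypothetical, and on Windows the PowerShell tools may keep profiles in the encrypted SDK store instead of this file.

```python
import configparser
from pathlib import Path


def write_profile(path: Path, profile: str, access_key: str, secret_key: str) -> None:
    """Add or overwrite one profile in a shared-credentials-style INI file."""
    # interpolation=None so characters such as '%' in a secret are stored literally.
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # a missing file is silently ignored
    config[profile] = {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w") as fh:
        config.write(fh)
    # Restrict the file to the current user, since it holds secrets.
    path.chmod(0o600)
```

Subsequent steps then select the credentials by profile name rather than handling the raw keys again.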

Check and create an Amazon ECR repository:

As mentioned under the prerequisites, once the AWS profile was available, the pipeline checked whether the Amazon ECR repository existed and created it only if it did not. This makes the step idempotent: running the pipeline repeatedly still leaves exactly one repository.
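The check-then-create logic maps onto the DescribeRepositories and CreateRepository operations that the extra IAM permissions allow; DescribeRepositories reports a RepositoryNotFoundException when the repository is absent. Here is a minimal Python sketch of that pattern against a hypothetical client interface, with an in-memory fake so the logic can run without AWS. The names RegistryClient, ensure_repository, and FakeRegistry are illustrative only, not part of any AWS SDK.

```python
from __future__ import annotations

from typing import Protocol


class RepositoryNotFound(Exception):
    """Raised by the (hypothetical) client when a repository does not exist."""


class RegistryClient(Protocol):
    def describe_repository(self, name: str) -> dict: ...
    def create_repository(self, name: str) -> dict: ...


def ensure_repository(client: RegistryClient, name: str) -> tuple[dict, bool]:
    """Return (repository, created). Safe to call on every pipeline run."""
    try:
        # Existing repository: nothing to do.
        return client.describe_repository(name), False
    except RepositoryNotFound:
        # Only create when the describe call says it is missing.
        return client.create_repository(name), True


class FakeRegistry:
    """In-memory stand-in for a registry, used to exercise the logic."""

    def __init__(self) -> None:
        self.repos: dict[str, dict] = {}

    def describe_repository(self, name: str) -> dict:
        if name not in self.repos:
            raise RepositoryNotFound(name)
        return self.repos[name]

    def create_repository(self, name: str) -> dict:
        self.repos[name] = {"repositoryName": name}
        return self.repos[name]
```

Catching only the not-found error matters: any other failure, such as missing permissions, should stop the pipeline rather than trigger a create.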

Remove AWS Credentials:

The last step in the azure-pipelines.yml file removed the credentials. Because this step uses the condition: always() setting, it runs even if an earlier step fails, so the credentials are not left behind on the build agent. You can read more at yaml-specify-condition.
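In Azure Pipelines, steps after a failure are skipped by default, while a step marked condition: always() still runs. Python's try/finally gives the same guarantee, which this small sketch illustrates; run_with_cleanup is a hypothetical name, and the steps and cleanup stand in for the pipeline tasks and the credential-removal step.

```python
from typing import Callable, Iterable


def run_with_cleanup(steps: Iterable[Callable[[], None]], cleanup: Callable[[], None]) -> None:
    """Run steps in order; stop at the first failure, but always run cleanup.

    The original exception is re-raised after cleanup, so the run is still
    reported as failed, much like a pipeline whose final always() step succeeds.
    """
    try:
        for step in steps:
            step()
    finally:
        # Runs on success and on failure, like the credential-removal step.
        cleanup()
```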

With the supporting steps out of the way, let us focus on the core CI steps to build and push a Docker image to an Amazon ECR repository.

Step 1: Create the application code that will be hosted in a container

For this project, I used a simple static app. Its code is in the \app folder of my GitHub repository: kunduso/app-one.

Step 2: Create and tag a Docker image

The Dockerfile has the details.

As the image below shows, the Docker image is based on an Alpine image; the Dockerfile copies the files from the app folder, runs npm install, and defines an ENTRYPOINT.

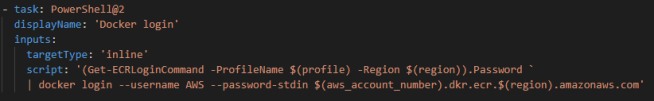

Before running docker build, I authenticated the Docker CLI to my default registry in Amazon ECR; this login is what authorizes the later docker push. That was possible using Get-ECRLoginCommand.
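The Amazon ECR documentation pipes the Password property returned by Get-ECRLoginCommand into docker login --username AWS --password-stdin, followed by the registry address <account>.dkr.ecr.<region>.amazonaws.com. Here is a minimal Python sketch of the same login that passes the token on standard input, so it never appears in the process arguments. The account number, region, and helper names are hypothetical; in the pipeline, the account number would come from the aws_account_number library variable and the token from Get-ECRLoginCommand. The run parameter exists only so the call can be tested without Docker.

```python
import subprocess
from typing import Callable


def registry_uri(account: str, region: str) -> str:
    """Private Amazon ECR registry host for an account and region."""
    return f"{account}.dkr.ecr.{region}.amazonaws.com"


def docker_login(password: str, registry: str, run: Callable[..., object] = subprocess.run) -> None:
    """Log the Docker CLI in to ECR, feeding the token on stdin instead of argv."""
    args = ["docker", "login", "--username", "AWS", "--password-stdin", registry]
    run(args, input=password, text=True, check=True)
```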

The build and tag steps are relatively self-explanatory.
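For reference, the ECR image reference has the form <registry>/<repository>:<tag>, and that second tag is what docker push uses to find the destination. Here is a minimal Python sketch that produces the build and tag commands as argument lists; the local name, repository name, tag value, and function names are hypothetical, and each list could be passed to subprocess.run(cmd, check=True).

```python
from __future__ import annotations


def image_uri(registry: str, repository: str, tag: str) -> str:
    """Full ECR image reference: <registry>/<repository>:<tag>."""
    return f"{registry}/{repository}:{tag}"


def build_and_tag_commands(
    local_name: str, registry: str, repository: str, tag: str, context: str = "."
) -> list[list[str]]:
    """Return the docker build and docker tag argument lists, in order."""
    local_ref = f"{local_name}:{tag}"
    return [
        # Build from the Dockerfile in the context directory under a local name.
        ["docker", "build", "-t", local_ref, context],
        # Add a second tag pointing at the ECR repository, which docker push needs.
        ["docker", "tag", local_ref, image_uri(registry, repository, tag)],
    ]


if __name__ == "__main__":
    registry = "123456789012.dkr.ecr.us-east-2.amazonaws.com"  # hypothetical
    for cmd in build_and_tag_commands("app-one", registry, "app-one", "latest"):
        print(" ".join(cmd))
```

Building with the ECR reference directly in -t would also work; the two-step form simply mirrors the separate build and tag steps described here.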

Step 3: Upload the docker image into an image repository

I used the docker push command for this step.

After all the Docker CLI steps, I added a docker logout step to close the session.
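Here is a minimal Python sketch of the push and logout steps. One small design variation from the pipeline as described: the logout sits in a finally block, so the session is closed even when the push fails. The function name and the run parameter (present only so the logic can be tested without Docker) are hypothetical.

```python
import subprocess
from typing import Callable


def push_and_logout(image: str, registry: str, run: Callable[..., object] = subprocess.run) -> None:
    """Push a tagged image to ECR, then log the Docker CLI out of the registry."""
    try:
        # check=True raises CalledProcessError if the push fails.
        run(["docker", "push", image], check=True)
    finally:
        # Log out even after a failed push; check=False so a logout error
        # cannot hide the original push failure.
        run(["docker", "logout", registry], check=False)
```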

As mentioned above, the last step in the azure-pipelines.yml file removed the profile that stored the AWS credentials. Every AWS.Tools cmdlet in the pipeline referenced that profile through the ProfileName parameter.

That brings us to the end of this note. It contains a lot of detail, and I wrote it that way so that you can find all the relevant information in one place.

I provided code snippets throughout this note to explain the process and the underlying concept. You can find the complete code at my GitHub repository: kunduso/app-one, and the Azure Pipelines build logs at Open-Project\kunduso.app-one. You can also open the latest build log and walk through its steps alongside the tasks listed in the azure-pipelines.yml file.

Last but not least, here is the Docker image in my Amazon ECR app-one repository.

I hope this note helped you understand how to build and push a Docker image to Amazon ECR, and how to do so securely with Azure Pipelines. If you have any questions or suggestions, please reach out to me using the Contact page.